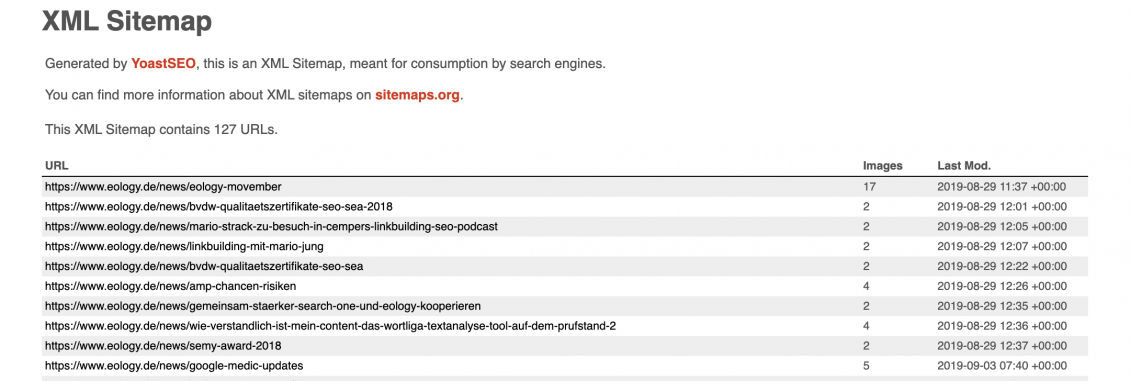

The XML sitemap is a text file that lists all the important URLs of a domain to make it easier for search engines such as Google to crawl the website. It can include all subpages, only a selection of pages, or paths to videos and images. The document is stored on the server and functions as a kind of table of contents for the website. Beyond that, an XML sitemap offers further ways to support search engine crawlers in their work.

A well-maintained XML sitemap is very important, especially for SEO. It helps with the indexation of your website and can thereby indirectly support your position in the search results. It is worth generating an XML sitemap and keeping it up to date, because not only Google but also other search engines access it and use it to crawl the website effectively.

Supporting the crawler with a sitemap is very useful, because crawling unnecessary subpages costs Google time and money. Google and other search engines therefore try to scan only the most important and necessary subpages. On a large website with many subpages, the crawler may not examine all of them before leaving the site again. Here, a sitemap gives the bot a structured overview so that it recognizes which pages exist. Otherwise, the Googlebot depends on internal links and may not find all relevant pages.

The sitemap can also give the bot additional information about each subpage. You can specify the date of the last update, the priority within the page structure and the frequency of changes. However, Google has already announced that it does not pay attention to the priority or the change frequency. The date of the last change is also mostly ignored. For major changes, however, it can help to specify the date of change with `<lastmod>`. This makes it easier for Google to notice, for example, a switch to a mobile-friendly design. Google can then crawl all URLs again more quickly, because the crawler does not first have to discover the subpages through internal links.
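To make the `<lastmod>` field concrete, here is a minimal Python sketch that writes a sitemap with one `<url>` entry per page. The helper name `build_sitemap`, the example.com URLs and the dates are hypothetical. `<priority>` and `<changefreq>` are left out on purpose, since the text above notes that Google ignores them.

```python
import xml.etree.ElementTree as ET
from datetime import date

# Namespace defined by the sitemaps.org protocol
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML for an iterable of (url, last_modified) pairs.

    last_modified is a datetime.date, or None if the date is unknown.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, last_modified in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        if last_modified is not None:
            # Dates are written as YYYY-MM-DD
            ET.SubElement(entry, "lastmod").text = last_modified.isoformat()
    xml_bytes = ET.tostring(urlset, encoding="utf-8", xml_declaration=True)
    return xml_bytes.decode("utf-8")


if __name__ == "__main__":
    # Hypothetical pages, for illustration only
    print(build_sitemap([
        ("https://www.example.com/", date(2024, 1, 15)),
        ("https://www.example.com/blog/", None),
    ]))
```

In practice a CMS or plug-in would supply the page list and the real modification dates. The sketch only shows the shape of the resulting file.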

For this reason, it is a good strategy to update the XML sitemap regularly so that the bots can also reliably crawl and index newly created pages. This is one of several reasons to use tools and plug-ins that automate the process and, in some cases, also upload the finished sitemap.

Since a sitemap is usually very large, it is a good idea to have it generated automatically. A manually created sitemap can easily miss some of the important paths and links it should contain. Since version 5.5, WordPress generates a basic XML sitemap on its own, and plug-ins such as Yoast SEO offer more control over sitemap creation alongside other SEO features. There are also standalone XML sitemap generators that create a complete page directory, so you then only have to upload the result to your own website.

Most plug-ins and CMSs with integrated sitemap creation also upload the sitemap to the correct path, so that the search engine bots can find it. Still, it does not hurt to reference the sitemap in the robots.txt and link to it there. Most crawlers request the robots.txt as the first file and immediately receive the link to the sitemap there, so that they can take a closer look at it.
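As an illustration, the sketch below shows a robots.txt with a `Sitemap:` line and uses `urllib.robotparser` from Python's standard library (its `site_maps()` method needs Python 3.8 or later) to check that the reference can be read back. The robots.txt content, the example.com URL and the helper `sitemap_urls` are assumptions made for this example.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt; the Sitemap line points crawlers to the sitemap
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/

Sitemap: https://www.example.com/sitemap.xml
"""


def sitemap_urls(robots_txt):
    """Return the sitemap URLs referenced in a robots.txt string."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() returns None when no Sitemap line is present
    return parser.site_maps() or []


if __name__ == "__main__":
    print(sitemap_urls(ROBOTS_TXT))
```

A check like this can be handy after a deployment to confirm that the sitemap reference was not lost.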

Once the sitemap has been created and uploaded, it is a good idea to submit it in Google Search Console. This makes it immediately available to Google, and you can see when the sitemap was last read. Google can then compare changes and recognize which new subpages have been added or which pages have not been crawled for a while. This increases the likelihood that the Googlebot will promptly crawl the site, and especially its various subpages, again or for the first time.
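The comparison described above can be pictured with a small Python sketch that diffs two versions of a sitemap. It is only an illustration of the idea and makes no claim about how Google actually processes sitemaps. The function names and sample data are hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace prefix mapping for the sitemaps.org protocol
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def read_sitemap(xml_text):
    """Map each <loc> URL to its <lastmod> value (None if missing)."""
    root = ET.fromstring(xml_text)
    entries = {}
    for url in root.findall("sm:url", NS):
        loc = url.findtext("sm:loc", namespaces=NS)
        if loc:
            entries[loc.strip()] = url.findtext("sm:lastmod", namespaces=NS)
    return entries


def diff_sitemaps(old_xml, new_xml):
    """Return (added_urls, changed_urls) between two sitemap versions."""
    old, new = read_sitemap(old_xml), read_sitemap(new_xml)
    added = sorted(set(new) - set(old))
    # A URL counts as changed when its <lastmod> value differs
    changed = sorted(u for u in new.keys() & old.keys() if new[u] != old[u])
    return added, changed
```

Running a diff like this before submitting a new sitemap shows which URLs are new and which carry an updated `<lastmod>`.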

Olga Fedukov completed her studies in Media Management at the University of Applied Sciences Würzburg. In eology's marketing team, she is responsible for the comprehensive promotion of the agency across various channels. Furthermore, she takes charge of planning and coordinating the content section on the website as well as eology's webinars.
